Heroku Dynos: Sizes, Types, and How Many You Need

Adam McCrea

@adamlogic

Heroku makes it effortless to deploy our web apps when we're just getting started, but anyone who's scaled an app on Heroku knows that there are still lots of decisions to make. Topping the list are "which dyno type should I use?" and "how many dynos do I need?"

Photo by Victoriano Izquierdo / Unsplash

If you're feeling overwhelmed or confused, you're not alone. It's not always clear which dyno type is best and how many we need, but by the end of this article, we'll all be experts.

If you just need a quick tl;dr, here it is:

Standard-2x dynos offer the best combination of price and performance for most apps. If you encounter memory quota warnings on 2x dynos, you should make the jump to Performance-L. For either dyno type, autoscaling is the only way to know how many dynos you should be running.

How did I land on that recommendation? Let's dig in.

Heroku dyno types

Heroku offers six "Common Runtime" dyno types. These are often referred to as dyno "sizes" since the more expensive ("larger") dynos typically offer more memory and CPU.

Heroku also offers "Private" and "Private Shield" dynos, which offer increasing levels of security compliance. The performance characteristics of these dyno types are almost identical to their Common Runtime counterparts though, so we'll focus on the six Common Runtime dyno types.

How are the dyno types different?

A few important supplemental notes to the comparison chart above:

- Monthly dyno cost from left to right: $5 (Eco), $7 (Basic), $25 (Standard-1x), $50 (Standard-2x), $250 (Perf-M), $500 (Perf-L).

- Eco, Basic, and Standard-1x dynos are identical performance-wise, but Heroku imposes some feature limitations on Eco and Basic. More on these limitations below.

- The two Performance-level dynos run on dedicated hardware. The other four dyno types (Eco, Basic, Standard-1x, and Standard-2x) run on shared hardware, where we're susceptible to "noisy neighbors" and somewhat ambiguous processing power (Heroku does not explain what the "Compute" metric represents).
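To make the price differences concrete, here is a rough sketch that computes monthly cost per GB of memory. The prices come from the list above. The memory figures are the ones used in this article (512 MB for Standard-1x and, by the "identical" claim, for Eco and Basic; 1 GB for Standard-2x; 2.5 GB for Perf-M; 14 GB for Perf-L). The `DYNOS` table and `price_per_gb` helper are names made up for this illustration.

```ruby
# Monthly price (USD) and memory quota (GB) per dyno type, as quoted in this article.
DYNOS = {
  "Eco"         => { price: 5,   memory_gb: 0.5 },
  "Basic"       => { price: 7,   memory_gb: 0.5 },
  "Standard-1x" => { price: 25,  memory_gb: 0.5 },
  "Standard-2x" => { price: 50,  memory_gb: 1.0 },
  "Perf-M"      => { price: 250, memory_gb: 2.5 },
  "Perf-L"      => { price: 500, memory_gb: 14.0 }
}.freeze

# Dollars per month for each GB of memory quota.
def price_per_gb(name)
  dyno = DYNOS.fetch(name)
  dyno[:price] / dyno[:memory_gb]
end

DYNOS.each_key do |name|
  puts format("%-12s $%.2f per GB", name, price_per_gb(name))
end
```

Memory is only one dimension (CPU and dedicated hardware matter too), so treat this as a rough lens rather than a ranking.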

With that as our high-level view, let's go into each dyno type in detail.

Eco dynos

Eco dynos automatically shut down during periods of inactivity and can only run for a limited number of hours per month. These limitations make Eco dynos a poor fit for production applications. The Eco plan provides enough hours to run a single dyno continuously for a month, but if we need a worker dyno for background processing (most apps do), we will not have enough Eco dyno hours.

Eco dynos are great for demos, experimentation, and perhaps a staging app.

Basic dynos

Basic dynos are identical in performance to Standard-1x dynos, and they don't have the limited hours and automatic shutdown constraints of Eco dynos. Sounds great, right?

The catch is a limitation in how we scale our dynos. We can run multiple Basic dynos if they're different process types (web and worker dynos, for example), but we can't run multiple dynos of the same process type. This means that if we ever need to scale to multiple web dynos, Basic dynos are not an option.

Even if a single Basic dyno is sufficient for our current traffic, running a production app on a single dyno is risky. Remember that Eco, Basic, and Standard dynos run on shared architecture. This means a "noisy neighbor" (more on this below) can slow our app down. Or maybe an unexpected spike in traffic has saturated our dyno. There are many ways a dyno can enter a "bad state", and running on a single dyno is a single point of failure.

Later on we'll go deeper on how many dynos to run, but for now let's rule out Basic dynos since they prevent having any redundancy in our web dynos.

Standard-1x dynos

Standard-1x dynos are the first of the "professional" dynos—dynos that don't have any feature restrictions upon them. Feature restrictions aside, these dynos are identical to Eco and Basic dynos. I know I've said that multiple times, but it bears repeating.

I've also mentioned that Standard dynos run on shared hardware, making them susceptible to noisy neighbors—other tenants running on the same hardware that are consuming more than their fair share of resources. Noisy neighbors on Standard dynos are a real thing, and they're tough to detect and mitigate.

That doesn't rule out Standard dynos altogether, though. At a fraction of the price of Performance dynos, we can run many more Standard dynos for the same cost, helping mitigate possible performance issues caused by noisy neighbors.

The real problem with Standard-1x dynos is memory. With a limit of 512 MB, many apps will exceed that quota with even a single process. Running multiple processes per dyno is critical, and we're going to take a little digression to discuss why.

Heroku routing and "in-dyno concurrency"

When a user requests a page on our web app, Heroku's router decides which of our web dynos receives the request. Ideally, the router would know how busy each dyno is, and it would give the request to the least busy dyno.

But that's not how it works.

Heroku's router uses a random routing algorithm. It doesn't care about the size of the request, the path of the request, or how busy each web dyno might be. This means our dynos will inevitably receive an unfair share of large or slow requests, at least some of the time. It also means that if a web dyno can only process a single request at a time, we've introduced a dangerous bottleneck into our system.

If we have no "in-dyno concurrency", a single slow request can cause other requests to back up inside the dyno. This is when we start to see request queue time increase. It's a combination of not running enough web dynos, and those dynos not having sufficient concurrency.

No matter how many dynos we run, it's critical that each dyno can process multiple requests concurrently. In Ruby, this means running multiple web processes, usually Puma workers. Running multiple threads doesn't cut it. Ruby threads do provide a bit of concurrency (especially when there's a lot of I/O), but because of the GVL only one thread can execute Ruby code at a time, so it's not true parallelism.

Multiple processes on a Standard-1x dyno

This is an important point when considering a Standard-1x dyno, because most Rails apps will consume far more than 512 MB when running multiple Puma workers.

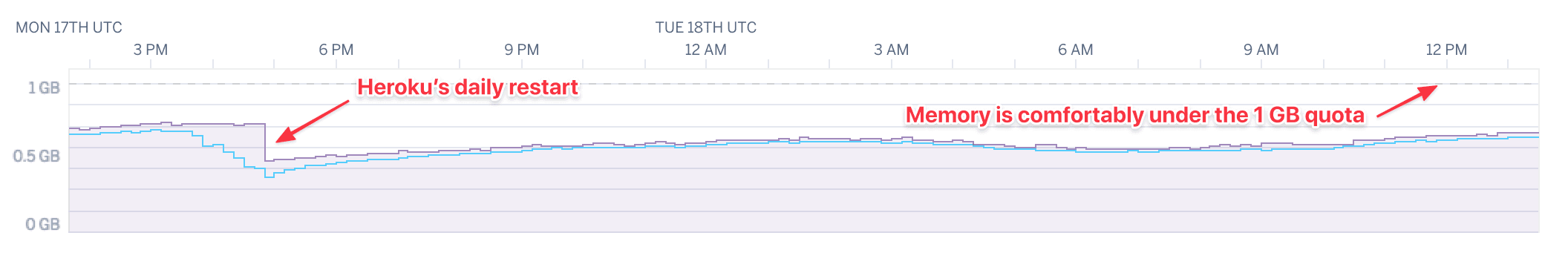

An easy way to test this is to run your app for 24 hours on Standard-2x dynos (which we'll discuss next) with 2 Puma workers. Usually this is accomplished by setting WEB_CONCURRENCY to "2", and ensuring that you've uncommented the "workers" line in your Puma config. Check your memory usage on your Heroku dashboard, and you should see it start to level off after a few hours (it's normal for it to increase initially).
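For reference, a minimal sketch of what that Puma config might look like. It assumes the conventional `config/puma.rb` location. The `RAILS_MAX_THREADS` variable and the default values are assumptions for illustration, not recommendations for your app.

```ruby
# config/puma.rb (sketch; adjust values for your app)

# Number of Puma worker processes per dyno. Set the variable on Heroku with,
# for example: heroku config:set WEB_CONCURRENCY=2
workers Integer(ENV.fetch("WEB_CONCURRENCY", 2))

# Threads per worker. The variable name and the default of 5 are assumptions.
max_threads = Integer(ENV.fetch("RAILS_MAX_THREADS", 5))
threads max_threads, max_threads

# Load the app before forking so workers can share memory via copy-on-write.
preload_app!

# Heroku provides the port to bind to in the PORT variable.
port ENV.fetch("PORT", 3000)
```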

For most of us, this experiment will show 2 processes consuming somewhere between 500-1000 MB. Since running at least 2 processes is a must, and Standard-1x dynos are limited to 512 MB, Standard-1x is rarely a viable option.

Pro tip: One way to decrease memory usage for Ruby apps on Heroku is by using the Jemalloc buildpack.

Standard-2x dynos

Standard-2x dynos are the same as Standard-1x dynos, but with double the memory and CPU at double the price.

If you're wondering how a single Standard-2x dyno is any different than two Standard-1x dynos, it's all about the memory and concurrency. While most apps are constrained by memory to a single process in a 1x dyno, the 2x dyno opens up the possibility of running multiple processes.

Compare these scenarios:

- Two 1x Dynos running 1 web process: Heroku routes requests randomly between the two dynos. Inevitably it will make bad decisions, sending requests to a busy dyno when another is available for work.

- One 2x Dyno running 2 web processes: The web processes will balance the requests coming into the dyno, ensuring that an available process always gets the next request. Same cost, better concurrency.
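The two scenarios above can be compared with a toy queueing simulation. This is a sketch under simplified assumptions (a fixed 100 ms service time, Poisson arrivals at 14 requests per second, a seeded random number generator), not a model of Heroku's actual router. All names and numbers here are illustrative.

```ruby
# Toy comparison of mean queue time for two layouts with the same total capacity.
SERVICE_TIME = 0.1   # seconds per request (assumed)
ARRIVAL_RATE = 14.0  # requests per second (assumed, about 70% utilization)
REQUESTS     = 20_000

# Poisson arrivals: exponentially distributed gaps between requests.
def poisson_arrivals(count, rate, rng)
  clock = 0.0
  Array.new(count) { clock += -Math.log(1.0 - rng.rand) / rate }
end

# Two 1x dynos with one process each; the router picks a dyno at random.
def random_routing_wait(arrivals, service_time, rng)
  free_at = [0.0, 0.0]
  total_wait = 0.0
  arrivals.each do |arrived|
    dyno = rng.rand(2)
    started = [arrived, free_at[dyno]].max
    total_wait += started - arrived
    free_at[dyno] = started + service_time
  end
  total_wait / arrivals.size
end

# One 2x dyno with two processes; the next request goes to whichever
# process becomes free first.
def shared_queue_wait(arrivals, service_time)
  free_at = [0.0, 0.0]
  total_wait = 0.0
  arrivals.each do |arrived|
    process = free_at.index(free_at.min)
    started = [arrived, free_at[process]].max
    total_wait += started - arrived
    free_at[process] = started + service_time
  end
  total_wait / arrivals.size
end

arrivals = poisson_arrivals(REQUESTS, ARRIVAL_RATE, Random.new(42))
random_ms = random_routing_wait(arrivals, SERVICE_TIME, Random.new(7)) * 1000
shared_ms = shared_queue_wait(arrivals, SERVICE_TIME) * 1000

puts format("Two 1x dynos, random routing: %.1f ms mean queue time", random_ms)
puts format("One 2x dyno, two processes:   %.1f ms mean queue time", shared_ms)
```

Both layouts have identical capacity and see identical traffic. The only difference is whether a request can wait behind a busy process while another one sits idle.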

Standard-2x dynos make in-dyno concurrency possible while costing a fraction of Performance dynos. That's why I recommend them for most apps.

But not all apps can squeeze multiple processes onto a 2x dyno. Rails apps that consume 1 GB or more memory with two processes are not uncommon, and for those apps there are Performance dynos.

Performance-M dynos

Perf-M dynos are the first of two "Performance" dyno options. Looking at the cost and performance characteristics relative to other dyno types, we can see there's not a lot of value here.

- Perf-M dynos have less than one fifth the memory of Performance-L dynos at half the cost.

- Perf-M dynos have only 2.5x the memory of Standard-2x dynos at 5x the cost.

Not much more to say about these. They're just a bad deal.

Performance-L dynos

Perf-L dynos cost $500/month per dyno—10 times the cost ($50/month) of Standard-2x—but we get what we pay for. With a 14 GB memory quota, we can easily run several app processes in a single dyno.

Performance dynos run on dedicated architecture, so we don't have to worry about noisy neighbors. With Standard dynos, performance differences can be noticeable between dynos, such as after restarting or deploying. Performance dynos don't have this issue. The performance is very consistent from one dyno to the next.

Still, the performance variability with Standard dynos can be mitigated by running more of them, so I only recommend Perf-L dynos for apps that are memory-constrained on 2x dynos.

How many dynos?

Once we've selected a dyno type, the next logical question is "how many of them?" Let's run a quick calculation.

We'll assume an example app that takes 100ms to process each request. This translates to a capacity of 10 requests per second without any concurrency. If we're running two processes per dyno, this takes us to a capacity of 20 requests per second per dyno.

We can look at Heroku's throughput chart to see how many requests per second the app receives. Let's assume this example app maxes out at 200 requests per second.

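The math is straightforward: If each dyno has a capacity for 20 requests per second and the app is going to receive 200 requests per second, we’ll need 10 dynos. Here's the formula:

dynos needed = peak requests per second / (processes per dyno × requests per second per process) = 200 / (2 × 10) = 10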
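The same calculation as a small Ruby sketch. The `dynos_needed` helper and its parameter names are made up for this example, and it assumes every request takes exactly the same time.

```ruby
# Naive capacity estimate: assumes uniform response times and evenly spread traffic.
def dynos_needed(peak_rps:, response_time_ms:, processes_per_dyno:)
  per_process_rps = 1000.0 / response_time_ms          # 100 ms => 10 requests/second
  per_dyno_rps = per_process_rps * processes_per_dyno  # 2 processes => 20 requests/second
  (peak_rps / per_dyno_rps).ceil                       # round up to whole dynos
end

puts dynos_needed(peak_rps: 200, response_time_ms: 100, processes_per_dyno: 2) # => 10
```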

The only problem is that this example app doesn't exist.

Dyno calculations in the real world

If we looked at a real app instead of a hypothetical app, we'd see some stark differences:

- Response times are not consistent. Some endpoints are slow, some are fast.

- Requests don't show up evenly. We might get a burst of 1,000 requests in one minute, then just a trickle of traffic for the next 10 minutes.

- Traffic patterns change throughout the day, week, and year.

Attempting to use the calculation above in a real-world app is fraught with error. I've been there and felt the pain. We're bound to find ourselves in one of these scenarios:

- We under-provision our dynos, and our app struggles to keep up. Requests are queueing because we don't have enough capacity to serve them, and our users experience slow page requests. Unsure of how to fix the problem, we crank up the dynos. Now we're in the next scenario.

- We over-provision our dynos, and our app is performing just fine. Unfortunately, we're paying for extra capacity we don't need. This can be really frustrating, and it leads to the many claims of Heroku being too expensive.

So how can we determine how many dynos to run, having confidence that our app will stay fast, without paying any more than necessary?

Automation is the answer

Instead of trying to manually calculate something that's changing every second, let's make software do it for us. That's exactly what an autoscaler does: it continually calculates how many dynos are needed right now based on live metrics.
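To give a feel for the idea, here is a deliberately simplified sketch of one autoscaling decision based on request queue time. It is not how Judoscale or any particular autoscaler works. The `next_dyno_count` helper, its thresholds, and its limits are all hypothetical.

```ruby
# One scaling decision from the latest queue-time measurement.
# Thresholds and limits are illustrative defaults, not recommendations.
def next_dyno_count(current:, queue_time_ms:, upscale_above_ms: 100,
                    downscale_below_ms: 25, min: 2, max: 10)
  target =
    if queue_time_ms > upscale_above_ms
      current + 1   # requests are backing up: add capacity
    elsif queue_time_ms < downscale_below_ms
      current - 1   # plenty of headroom: shed a dyno
    else
      current       # within the comfortable range: hold steady
    end
  target.clamp(min, max)
end

puts next_dyno_count(current: 3, queue_time_ms: 250) # => 4
puts next_dyno_count(current: 3, queue_time_ms: 10)  # => 2
```

Keeping the minimum at two dynos preserves the redundancy discussed earlier, even when traffic is quiet.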

We built an autoscaler that does this better than anything out there, but this isn't a sales pitch for Judoscale—it is a pitch for autoscaling in general. There's just no reason to do it the hard way.

Every production app should have autoscaling in place. Even if your production app receives little traffic and runs fine on a single dyno, that dyno is a single point of failure. Running a single dyno without autoscaling is an invitation for slowdowns at best, a production outage at worst. Think of it as a low-cost safety net.

Autoscaling on Heroku is easy and cheap. With it we avoid the painful battles of being under-provisioned, and we avoid paying for unnecessary capacity when over-provisioned.

Putting it all together

We started with six dyno types, and narrowed them down to two: Standard-2x and Performance-L.

I recommend all apps try Standard-2x dynos first, running two app processes per dyno. If the 1 GB memory quota proves insufficient for your app, then make the jump to Perf-L. (Or better yet, find out why your app is consuming so much memory!)

Regardless of dyno type, always set up autoscaling on production apps. The cost of this setup will rival any non-Heroku option, and it'll run smoothly for years with little manual intervention.