How to Fix Heroku's Noisy Neighbors

Jon Sully (@jon-sully)

If you've had any kind of production-tier application running on Heroku with a moderate (or higher) level of traffic over the last several years, you've probably experienced Heroku's noisy neighbor issues. A total phantom, this problem is difficult to track, invisible to most monitoring, and hard to nail down as a root cause, which makes it a pain in the butt! "Why is my app responding slowly to some requests even after we've put months of development time into making our endpoints more efficient!?" "Why does everything run totally smooth on a Perf dyno but choppier and slower on Std? We don't want to pay for Perf long-term!" We've heard plenty of these stories. We've even experienced them ourselves! And, to make things even more complicated, Heroku doesn't reveal much about their architecture or how resources are shared, so when they changed that system in the last year, it only muddied the timeline further. Heroku's Noisy Neighbors. Let's talk about it.

First, some context on where our findings come from. We (Adam and Jon) are the Judoscale team: two developers helping to solve capacity issues and keep your app's alerts at bay with autoscaling. Judoscale is the biggest and most capable autoscaling add-on available in the Heroku ecosystem; we're processing well over three billion metrics per day across all kinds of different apps and configurations on the platform. And okay, we hear you: hand-wavy metrics with big numbers don't mean anything without context and proper units, and I'm not trying to be a salesperson here! The point is that we process enough data from Heroku dynos to extract some interesting insights about the platform itself and its health. We're not the IANA watching traffic patterns across the entire federated DNS system of the internet (nor are we statistics specialists), but we do see enough Heroku-specific data to capture what happens when Heroku quietly rolls out changes to some of their performance tooling!

Noisy Neighbors Defined

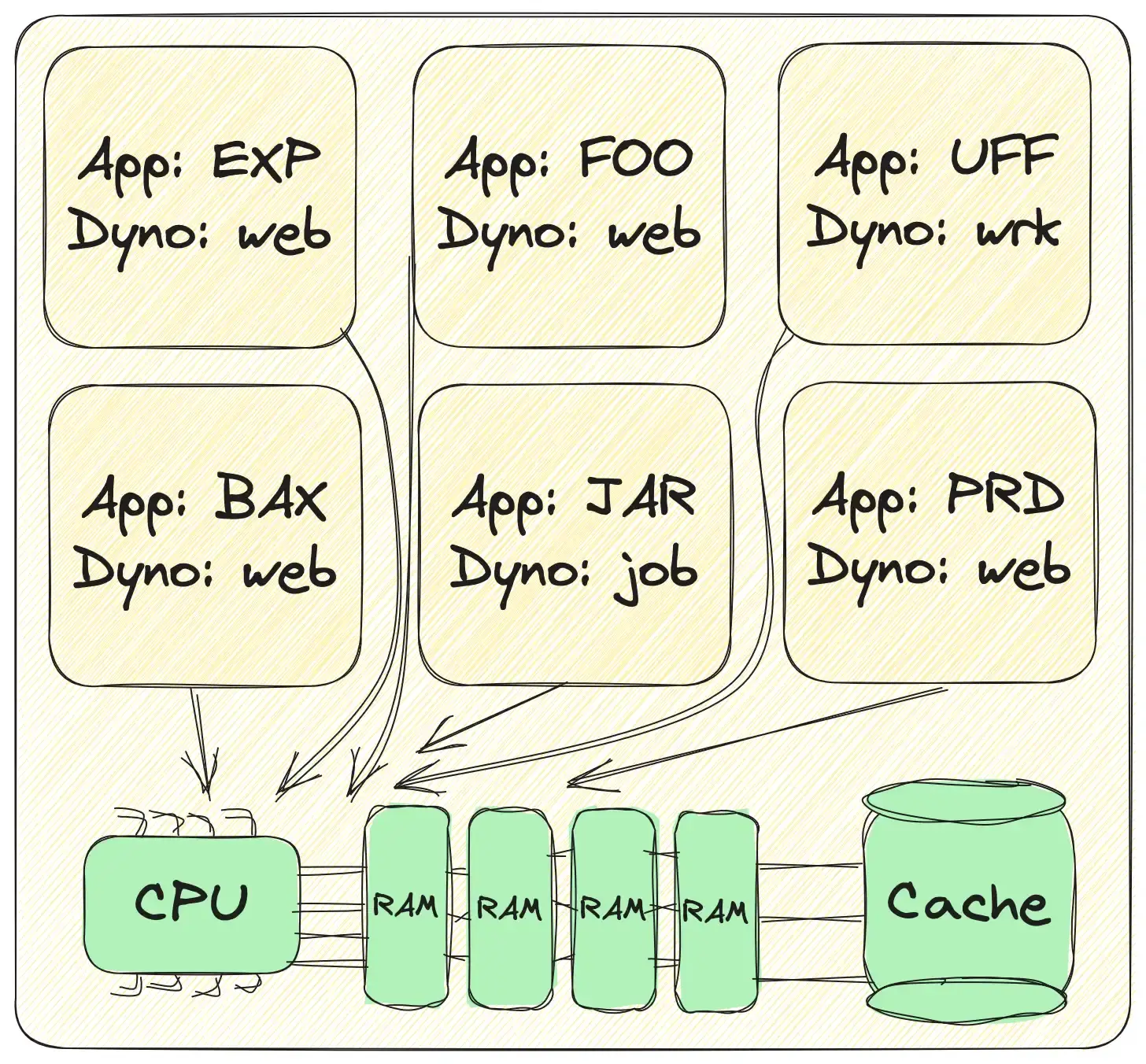

We won't spend too long here since most veteran Heroku users should be familiar with the problem at hand, but the idea is this: Heroku 'dynos' are really just containers, like Docker containers. As with all container-based infrastructure, you have many virtual boxes (the containers) sharing the underlying physical resources of a single machine (the server). We generally call this resource-sharing (novel, right?). The image above illustrates it.

And if that looks busy, it's because it is! Each of the dynos is truly fighting for resources on the underlying host. They all want the CPU all the time; they all want all the memory. Heroku's job is to try to grant each container an equal share of the pie.

But in reality, perfectly sharing physical resources between virtual hosts isn't possible. Scheduling problems like this one run deep into computer-science theory, so we won't go any further here, but understand the implication: noisy neighbors on shared hosts are inevitable to some degree. There is simply no way to perfectly share resources across many virtual hosts when each one is constantly changing in how many resources it needs, uses, and holds. The chaos!
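To make "sharing the pie" a bit more concrete, here is a textbook max-min fairness ("water-filling") allocator. It is not Heroku's scheduler, which isn't public; it's a minimal sketch, assuming demands are known and fixed for one instant. Light containers get everything they ask for, and whatever they leave on the table is split among the heavier ones. The optional `burst_cap` is a hypothetical knob limiting how much any single container may take, and the container names are made up.

```python
from typing import Dict, Optional


def max_min_fair_share(
    capacity: float,
    demands: Dict[str, float],
    burst_cap: Optional[float] = None,
) -> Dict[str, float]:
    """Split `capacity` CPU units among containers with max-min fairness.

    Light containers get everything they ask for; whatever they leave on the
    table is split evenly among the heavier ones ("water-filling"). If
    `burst_cap` is set, no container may take more than that, even when the
    leftover capacity would otherwise sit idle.
    """
    # Effective demand: the request, optionally clipped to the burst cap.
    remaining = {
        name: demand if burst_cap is None else min(demand, burst_cap)
        for name, demand in demands.items()
    }
    allocation = {name: 0.0 for name in demands}
    left = capacity
    while remaining and left > 1e-12:
        equal_share = left / len(remaining)
        # Containers whose demand fits inside an equal share are fully satisfied.
        satisfied = {n: d for n, d in remaining.items() if d <= equal_share}
        if not satisfied:
            # Everyone left wants more than an equal share: split evenly and stop.
            for name in remaining:
                allocation[name] = equal_share
            break
        for name, demand in satisfied.items():
            allocation[name] = demand
            left -= demand
            del remaining[name]
    return allocation


demands = {"quiet-a": 0.5, "quiet-b": 1.0, "busy-c": 3.0, "busy-d": 3.0}
print(max_min_fair_share(4.0, demands))
# {'quiet-a': 0.5, 'quiet-b': 1.0, 'busy-c': 1.25, 'busy-d': 1.25}
print(max_min_fair_share(4.0, demands, burst_cap=1.0))
# {'quiet-a': 0.5, 'quiet-b': 1.0, 'busy-c': 1.0, 'busy-d': 1.0}
```

This is a single snapshot. Real demands shift from moment to moment, so any allocator is always reacting to the previous moment's numbers, and that lag is where noisy neighbors slip through. The capped run also shows the trade-off of tighter isolation: 0.5 CPU sits idle so that no single container can crowd out the others.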

But, that doesn't mean we can't minimize the impact and severity of noisy neighbors! We can even use tooling to fix it when it happens! Hold that thought.

Noisy Neighbors Today

As of August 2023, Heroku noisy neighbors remain an issue that many apps face, but Heroku has made some changes recently. As we noted before, Heroku doesn't reveal much about their architecture or resource-allocation algorithms at all. We have no special access here as Heroku add-on developers (though we wish we did), but Heroku did allude to some changes in this space a few months ago:

We previously allowed individual dynos to burst their CPU use relatively freely as long as capacity was available... This is in the spirit of time-sharing and improves overall resource utilization by allowing some dynos to burst while others are dormant or waiting on I/O.

Some customers using shared dynos occasionally reported degraded performance, however, typically due to “noisy neighbors”

To help address the problem of noisy neighbors, over the past year Heroku has quietly rolled out improved resource isolation for shared dyno types to ensure more stable and predictable access to CPU resources. Dynos can still burst CPU use, but not as much as before.

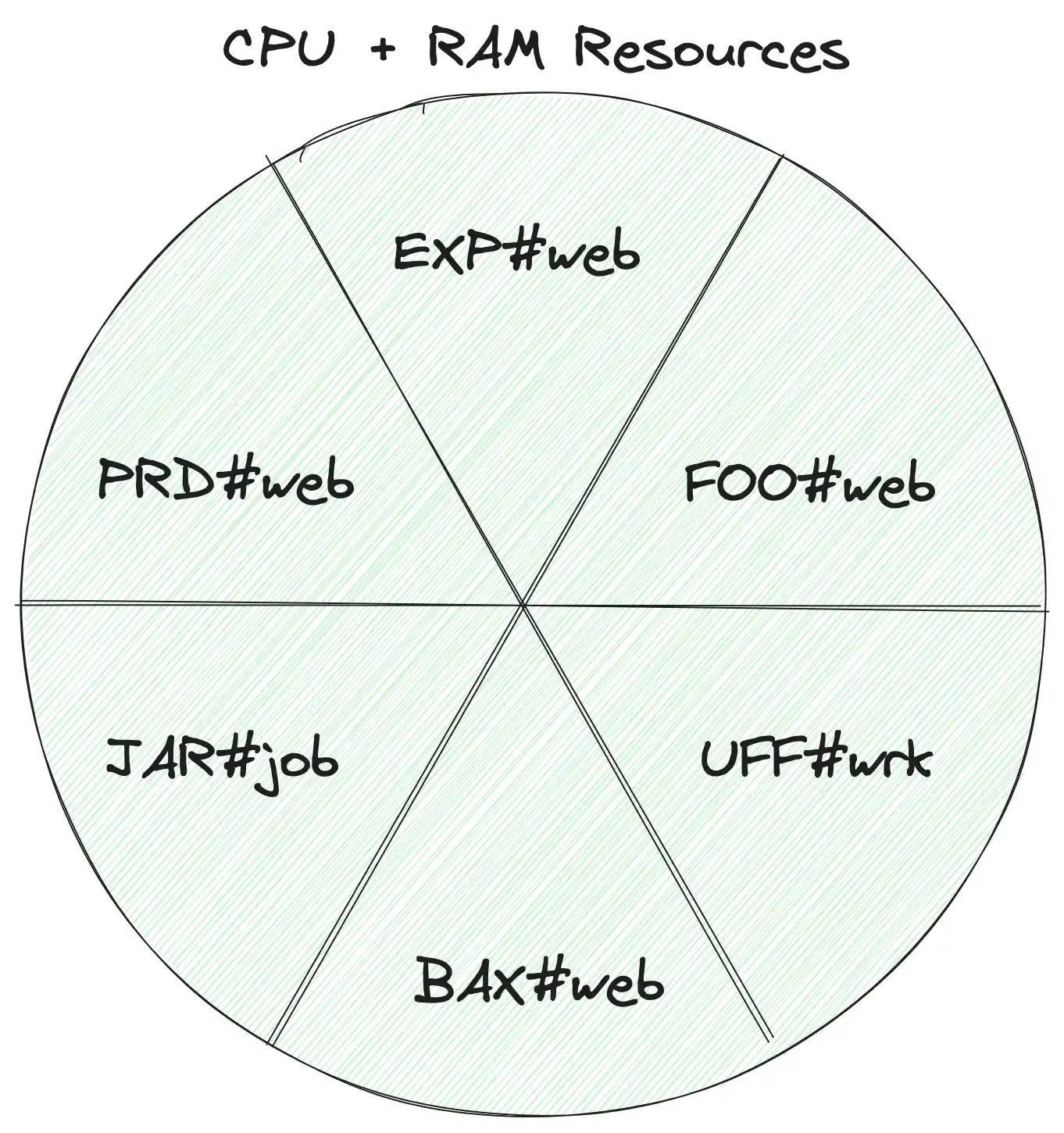

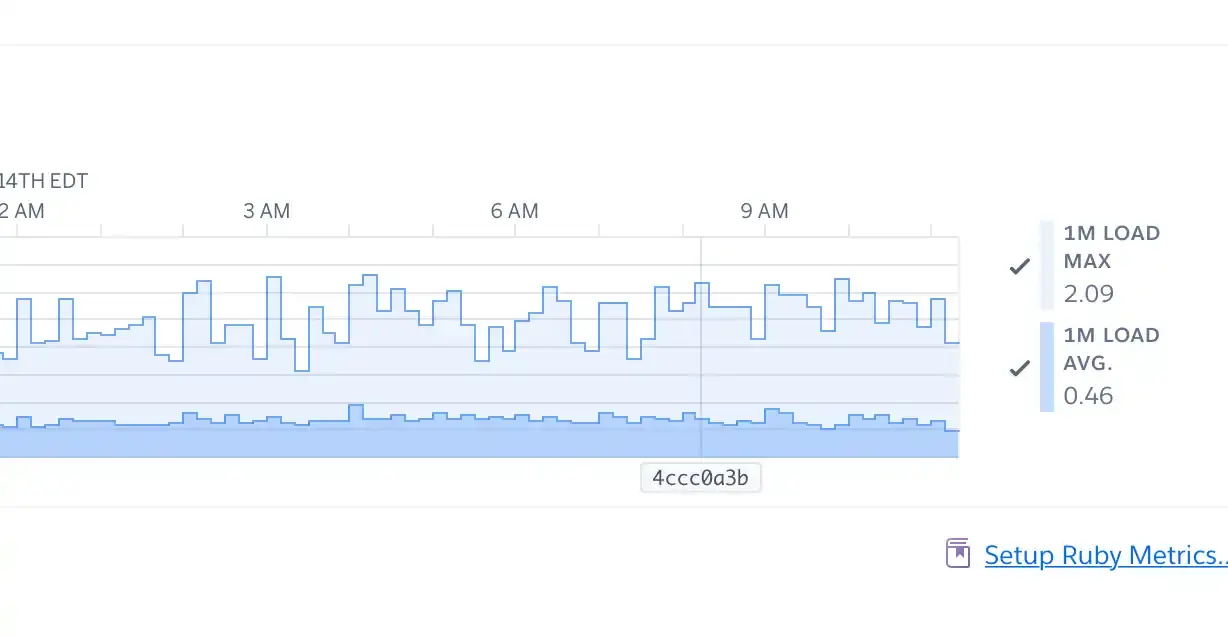

And we did see those changes impact apps on the platform, including ours. Put more succinctly, Heroku is now clamping down on apps that attempt to use more resources than they're paying for. This is all about dyno load.

But hold that thought too! The key takeaway from these changes is that your application now needs to keep a closer eye on its load. Heroku will throttle your dynos more quickly than in years prior!

Do You Have Noisy Neighbors?

If you're having capacity, dyno, or response time issues, it's possible you're running into noisy neighbors. It's also possible that you're the noisy neighbor and Heroku is throttling your dyno(s) accordingly.

So first, a big note: you should always begin performance evaluations elsewhere. Noisy neighbors are not the first thing to point to for a slow application. Only continue down this track if you know that your endpoints are performant, your scale is appropriate (as in, your queue time is very low), your requests aren't triggering slow queries (or hundreds of queries per request!), and all of your monitoring tools show normal stats in every typical way. If you've confirmed all of those criteria but still see sporadic, inexplicably high response times, you could have a noisy neighbor problem.
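If you're not already tracking queue time, one way to measure it on a Python (WSGI) app is to compare the current time against the `X-Request-Start` header, which Heroku's router adds with the time (in Unix-epoch milliseconds) it received the request. This is a minimal sketch under that assumption; the `report` callback is a hypothetical hook for whatever metrics or APM client you use.

```python
import time
from typing import Callable, Iterable, Optional


def queue_time_ms(environ: dict, now_ms: Optional[float] = None) -> Optional[float]:
    """Milliseconds between the router receiving the request and the app seeing it.

    Assumes X-Request-Start holds Unix-epoch milliseconds. Other proxies use
    formats such as "t=<microseconds>", which this sketch simply ignores.
    """
    raw = environ.get("HTTP_X_REQUEST_START")
    if not raw:
        return None
    try:
        started_ms = float(raw)
    except ValueError:
        return None
    if now_ms is None:
        now_ms = time.time() * 1000.0
    # Router and dyno clocks can disagree slightly; never report negative waits.
    return max(0.0, now_ms - started_ms)


class QueueTimeMiddleware:
    """WSGI middleware that hands each request's queue time to `report`."""

    def __init__(self, app: Callable, report: Callable[[float], None]):
        self.app = app
        self.report = report  # hypothetical hook, e.g. send the value to your APM

    def __call__(self, environ: dict, start_response: Callable) -> Iterable[bytes]:
        waited = queue_time_ms(environ)
        if waited is not None:
            self.report(waited)
        return self.app(environ, start_response)
```

Because the measurement happens inside the dyno, it captures time spent waiting for a free process or thread, which is the signal that tells you whether your scale is appropriate.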

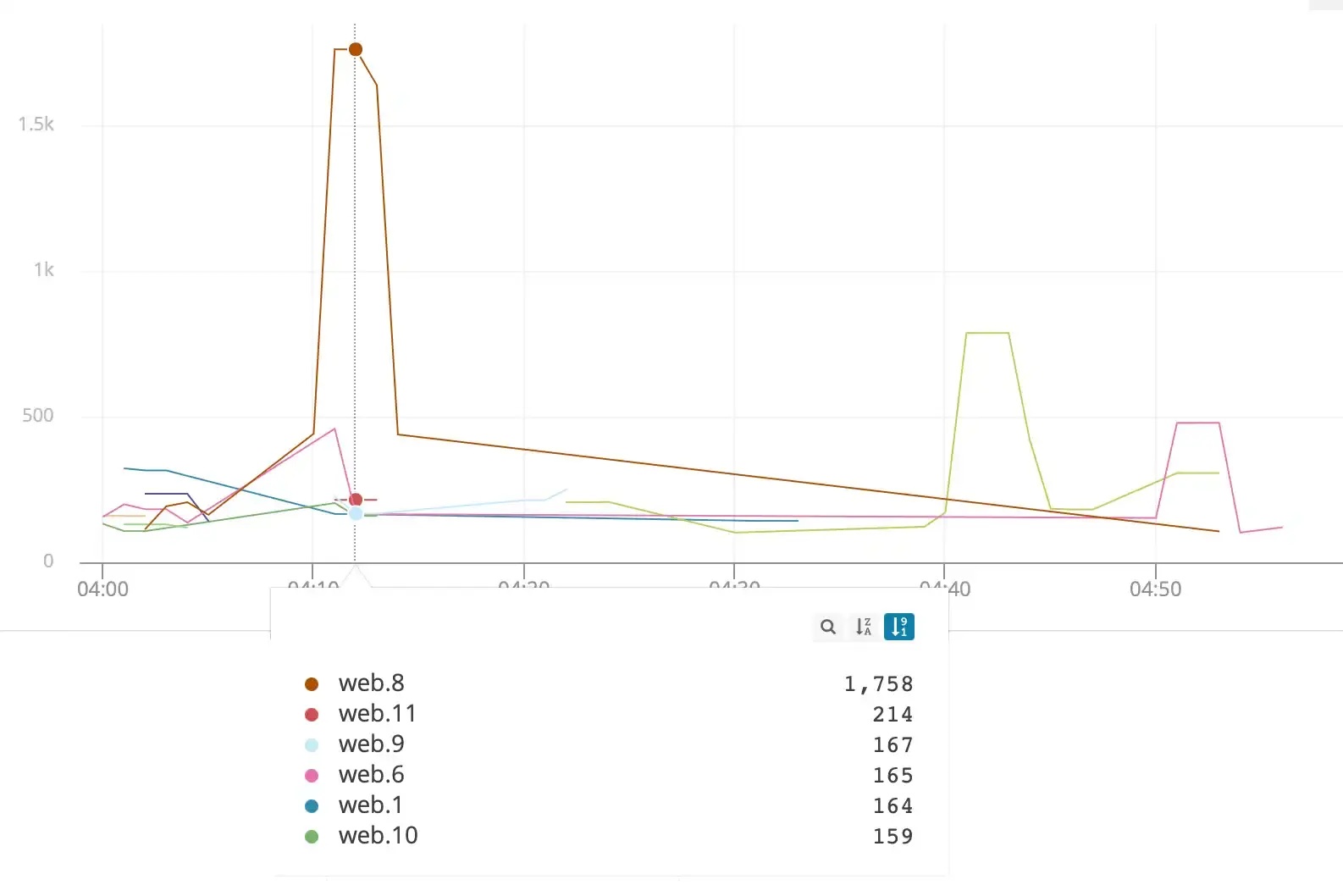

A good place to start is the Dyno Load chart that Heroku exposes in each app's "Metrics" tab (see image above). It's one of very few monitors Heroku exposes for seeing how much of the resource pie your app consumes across all of the hosts its dynos run on. If you're running Free, Hobby, or Standard dynos and see a 1M LOAD MAX breaching 2.0 or a 1M LOAD AVG above 1.0 anywhere in the chart's history, your app is likely getting throttled. You're getting reduced dyno performance because your app is the noisy neighbor!
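If you pull the raw readings out of the dashboard or a log drain, that rule of thumb is easy to automate. This sketch summarizes per-dyno 1-minute load readings into an average and a maximum and applies the thresholds from this post (average above 1.0, max at or above 2.0). How the chart's AVG and MAX lines are aggregated is our assumption, and the dyno names and readings are made up.

```python
from dataclasses import dataclass
from typing import Dict, List

# Rules of thumb from this post for Free/Hobby/Standard dynos.
AVG_LIMIT = 1.0  # 1M LOAD AVG should stay at or below this
MAX_LIMIT = 2.0  # 1M LOAD MAX reaching this suggests throttling


@dataclass
class LoadVerdict:
    load_avg: float
    load_max: float
    likely_throttled: bool


def check_dyno_load(samples: Dict[str, List[float]]) -> LoadVerdict:
    """Summarize 1-minute load readings across dynos and flag likely throttling.

    `samples` maps a dyno name to its 1-minute load readings over one window.
    """
    readings = [value for values in samples.values() for value in values]
    if not readings:
        raise ValueError("no load readings supplied")
    load_avg = sum(readings) / len(readings)
    load_max = max(readings)
    throttled = load_avg > AVG_LIMIT or load_max >= MAX_LIMIT
    return LoadVerdict(load_avg, load_max, throttled)


print(check_dyno_load({"web.1": [0.4, 0.8, 1.2], "web.2": [0.5, 0.6, 0.7]}))
```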

To really know if someone else is the noisy neighbor, you'll need more specialized tooling. Some Application Performance Monitoring (APM) tools let you break down metrics per dyno. This is super helpful for seeing whether your issues are really confined to a single dyno.

In our own per-dyno chart, only one dyno in the group was behaving slowly, so it was very likely the victim of a noisy neighbor. While that dyno recovered fairly quickly (hold that thought), a dyno experiencing neighbor noise tends to stay in a degraded state for a long time, often many hours. Since many APM tools don't even offer per-dyno breakdowns, this situation can turn into a frustrating guessing game when most other metrics look totally normal! Not all dynos are equal 😬.
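If your APM can export a per-dyno number, such as each dyno's median response time over the last few minutes, spotting the odd one out can be automated. This sketch uses the modified z-score, a standard robust outlier test based on the median absolute deviation (MAD), so one bad dyno can't drag the baseline along with it. The input format, the dyno names, and the 3.5 cutoff (a common default, not a value tuned for Heroku) are all assumptions.

```python
from statistics import mean, median
from typing import Dict, List


def slow_dynos(p50_ms: Dict[str, float], cutoff: float = 3.5) -> List[str]:
    """Return dynos whose median response time stands far above their siblings'.

    Uses the modified z-score, 0.6745 * (x - median) / MAD. When MAD is zero
    (most dynos report identical numbers), it falls back to the mean absolute
    deviation scaled by 1.253314.
    """
    if len(p50_ms) < 3:
        return []  # too few dynos to say what "normal" looks like
    values = list(p50_ms.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad > 0:
        scale = mad / 0.6745
    else:
        mean_ad = mean(abs(v - med) for v in values)
        if mean_ad == 0:
            return []  # every dyno reports the same number
        scale = 1.253314 * mean_ad
    # Only the slow side matters here: a fast outlier is not a victim.
    return [name for name, v in p50_ms.items() if (v - med) / scale > cutoff]


print(slow_dynos({"web.1": 120, "web.2": 110, "web.3": 130, "web.4": 125, "web.5": 480}))
# ['web.5']
```

When one dyno is flagged and its siblings look normal while serving the same code and traffic mix, the slowdown points at that dyno's host rather than at your application.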

Fixing Your Noisy Neighbors Problem

Remember above where we said that sharing resources perfectly across virtual hosts is actually impossible? That's still true. It is impossible to slice the pie perfectly for each host. But would you notice if the pie was only 1% or 2% off at any given time? Of course not! We don't need to fix noisy neighbors — we need to optimize, mitigate, and scale. Optimize our infrastructure to be resilient against noisy neighbor issues, mitigate noisy neighbors when they inevitably pop up, and scale dynamically to handle our required loads!

Oh, and it is worth mentioning — throwing money at the problem can work well for noisy neighbors. If you're willing to pay for them (though we don't always recommend them), perf dynos are built around dedicated hardware and will not have noisy neighbors (there are no neighbors)! It can sometimes be worth a quick switch to perf dynos for a few hours just to see if your application's metrics stabilize, too. That's another helpful indication that what you're facing on the std dynos is indeed noisy-neighbor-related.

Optimization

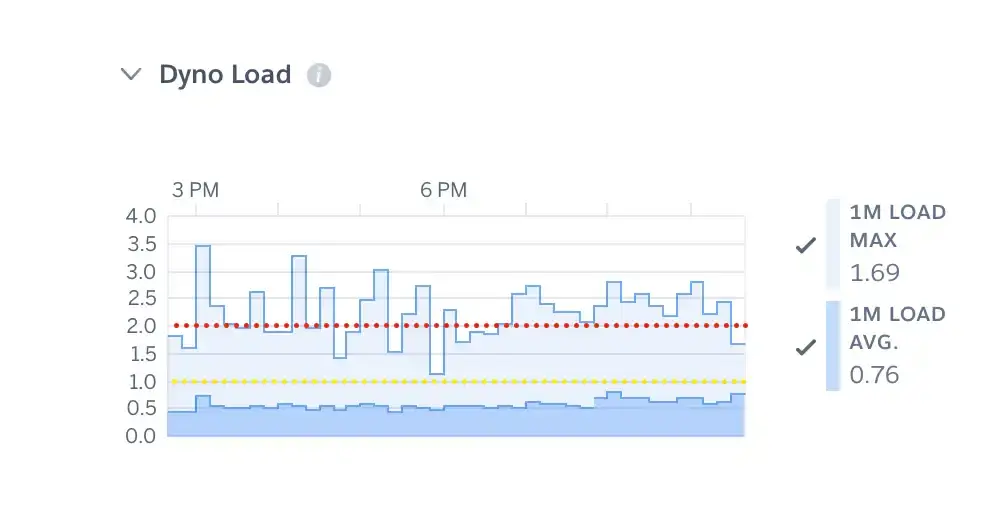

On the optimizing front, it's all about Dyno Load. Let's take another look at that chart. Heroku does tell us that Free, Hobby, and Standard dynos should not exceed a load average of 1.0. That may correspond to a different volume of requests on a Std-1x dyno than on a Std-2x, since the two have different amounts of resources, but the chart and the load values scale accordingly. Further, while Heroku doesn't offer any more specific advice than,

if your dyno load is much higher than the number listed above, it indicates that your application is experiencing CPU contention

we can tell you, through both experience and platform observation, that what they really mean is “if you are hitting a 1M LOAD MAX of 2.0 (or higher), that dyno will be throttled.” Your app is essentially pushing for too much CPU in these cases. The chart above shows this visually.

The dark blue area (average load) shouldn't exceed the yellow line (1.0), and the light blue area (maximum load) shouldn't exceed the red line (2.0). If your app's maximum load briefly exceeds the red line (as this app's does), you'll likely be throttled, but minimally. You may not notice this as a high-impact "noisy neighbor" event, but your app will be less performant than it would be at a lower load. If your app's average load exceeds the yellow line, it will likely experience heavier throttling and reduced performance. Heroku's changes this year simply mean that these limits are enforced more aggressively. Trust us, bad things tend to happen when you cross these lines!
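The "1M" in these charts refers to a one-minute load average. Assuming Heroku's figure behaves like the Linux kernel's 1-minute load average (our assumption), it is an exponentially damped moving average of the number of runnable tasks, sampled about every five seconds. This sketch reproduces that formula, which helps explain why even a short burst of busy threads shows up on the chart.

```python
import math
from typing import Iterable, List

SAMPLE_EVERY_S = 5.0  # Linux samples the run queue roughly every 5 seconds
WINDOW_S = 60.0       # the "1M" in 1M LOAD
DECAY = math.exp(-SAMPLE_EVERY_S / WINDOW_S)  # about 0.92 per sample


def one_minute_load(runnable: Iterable[int], start: float = 0.0) -> List[float]:
    """Exponentially damped average of runnable-task counts, one value per sample."""
    load = start
    history = []
    for count in runnable:
        # Keep ~92% of the old value and blend in ~8% of the new sample.
        load = load * DECAY + count * (1.0 - DECAY)
        history.append(load)
    return history


# An idle dyno that suddenly has two busy threads for one minute (12 samples):
print(round(one_minute_load([2] * 12)[-1], 2))  # 1.26
```

After one minute the value has covered about 63% of the distance to the true runnable count (1 - e^-1), so two CPU-hungry threads push a Standard dyno past the 1.0 guideline within a minute, and the value keeps climbing toward 2.0 if the burst continues.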

If your app currently lives above these lines and you're experiencing noisy neighbor problems, the answer is in front of you: reduce your dyno load. If this is a web process, the dyno is likely working on more requests at once than it can sustain. If it's a background process, you may be pushing too many jobs to that dyno. We agree with Heroku's own suggestions for lowering your dyno load:

Many applications have a way to tune the number of threads or processes that their application is attempting to use. Different languages have different performance characteristics, for instance, Ruby and Python have a GVL/GIL that prevents concurrent execution of program code by multiple threads. In general, if you are above the listed load, you will want to decrease process and/or thread counts until your application is under the given value.

The most universal answer to quickly lowering dyno load is to reduce your thread and/or process count.

We consider this optimization because it both keeps your app from being the noisy neighbor (and thus from being hurt by throttling) and gives your app a little more processing headroom in case another container decides to become your noisy neighbor. Keeping your load average between 0.6 and 0.9 tends to be the right balance between headroom and performance.
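If you want a starting point rather than pure trial and error, this rough sketch scales total concurrency (processes × threads) in proportion to the gap between observed and target load. It assumes load grows roughly linearly with concurrency, which is only an approximation (I/O-heavy apps in particular will deviate), so treat the result as a first guess and re-measure after each change. The 0.75 target is simply the midpoint of the 0.6 to 0.9 band.

```python
TARGET_LOW, TARGET_HIGH = 0.6, 0.9  # the load band suggested in this post


def suggest_total_concurrency(current_total: int, observed_load: float,
                              target: float = 0.75) -> int:
    """Rough first guess at total concurrency slots that lands near `target` load.

    Assumes load scales linearly with concurrency; re-measure after changing.
    """
    if observed_load <= 0:
        raise ValueError("observed_load must be positive")
    if TARGET_LOW <= observed_load <= TARGET_HIGH:
        return current_total  # already in the band; leave it alone
    return max(1, round(current_total * target / observed_load))


# 2 processes x 5 threads = 10 slots running at an average load of 1.5:
print(suggest_total_concurrency(10, 1.5))  # 5
```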

Mitigation

Unfortunately, while well-optimized apps tend to be less impacted by noisy neighbors, they will still face neighbor noise to some degree. Also unfortunately, there's almost nothing you can do when these instances crop up. Heroku gives us no tooling for managing our slices of shared resources 🙁.

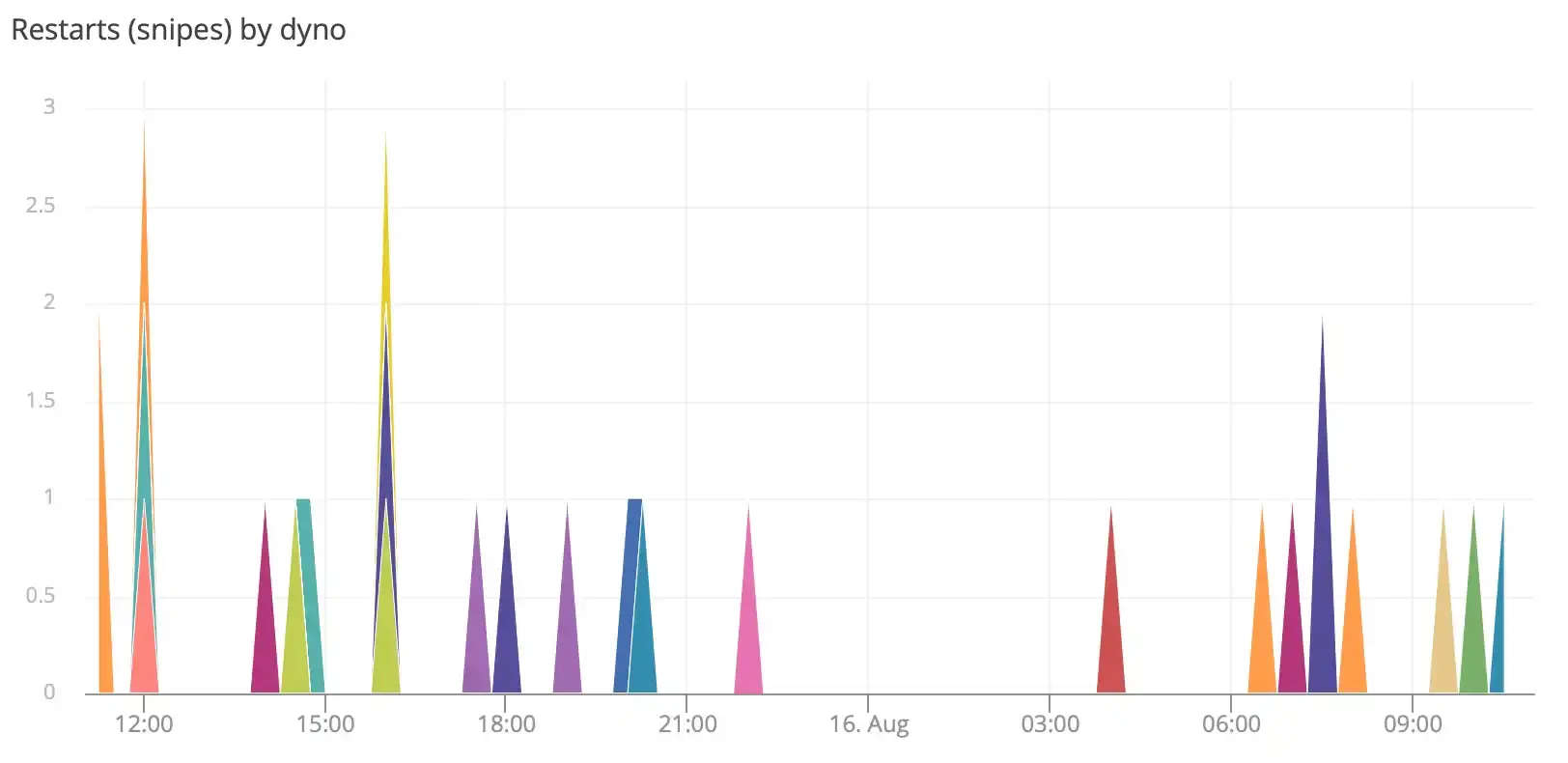

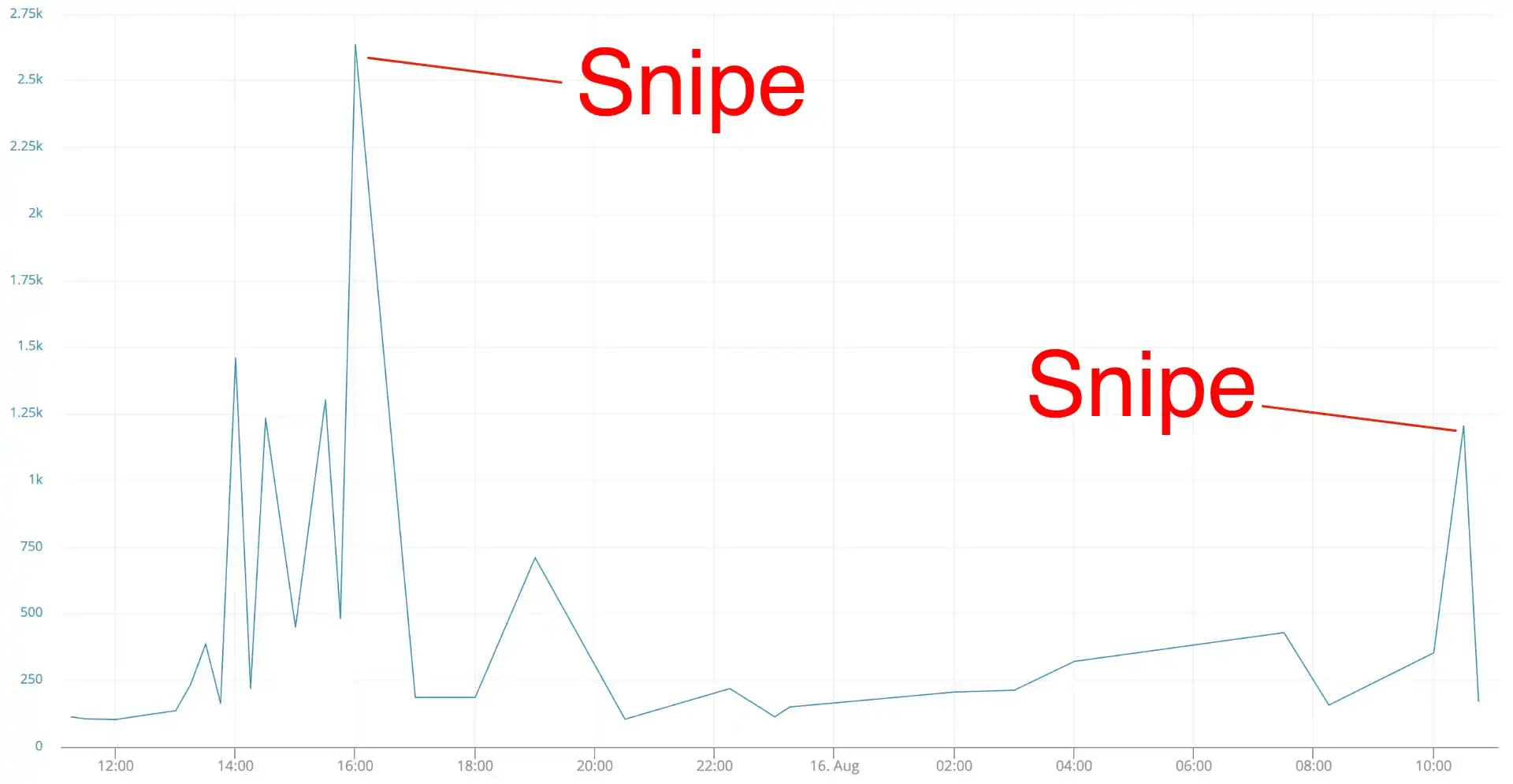

We grew annoyed by this reality, so we built a feature into Judoscale called The Dyno Sniper. The idea is that Judoscale watches for individual dynos reporting metrics outside of the app's typical measurements. If a dyno seems out of the norm, it gets sniped! Essentially, we built a way to sniff out dynos being impacted by noisy neighbors and kill them, causing replacement dynos to be spun up on a new server. We've been running this feature on our own production applications for several months now with great impact; noisy neighbors happen more often than you might think. The first image above shows our last 24 hours: over 20 snipes!

And the second image shows the metrics for one of our dynos during that time.

Take that, noisy neighbors! We've got an escape hatch out of your noise! Now we can mitigate noisy neighbor issues in seconds rather than waiting and hoping that said neighbor stops hogging all the CPU! Now we're talking!
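To make the idea concrete, here is a toy snipe loop. It is not Judoscale's implementation: the guardrails (a dyno must be flagged several rounds in a row, and only a limited number of restarts are allowed per cooldown window) are our own assumptions, chosen so a sniper never restarts away too much healthy capacity at once. The restart uses the Heroku Platform API's dyno restart endpoint, `DELETE /apps/{app}/dynos/{dyno}`; the sketch only builds the request so you can inspect it before sending it.

```python
import urllib.request
from typing import Dict, List

HEROKU_API = "https://api.heroku.com"


class DynoSniper:
    """Restart dynos that stay out of the norm, with guardrails against over-sniping."""

    def __init__(self, app: str, token: str, strikes_needed: int = 3,
                 max_per_window: int = 1, cooldown_s: float = 300.0):
        self.app = app
        self.token = token
        self.strikes_needed = strikes_needed  # consecutive flagged rounds required
        self.max_per_window = max_per_window  # restarts allowed per cooldown window
        self.cooldown_s = cooldown_s
        self.strikes: Dict[str, int] = {}
        self.recent: List[float] = []  # timestamps of recent restarts

    def observe(self, flagged: List[str], dynos: List[str], now: float) -> List[str]:
        """Record one round of outlier checks; return the dynos to restart now."""
        for dyno in dynos:
            # A dyno's strikes reset as soon as it looks normal again.
            self.strikes[dyno] = (self.strikes.get(dyno, 0) + 1) if dyno in flagged else 0
        self.recent = [t for t in self.recent if now - t < self.cooldown_s]
        budget = max(0, self.max_per_window - len(self.recent))
        candidates = [d for d in dynos if self.strikes[d] >= self.strikes_needed]
        targets = candidates[:budget]
        for dyno in targets:
            self.strikes[dyno] = 0
            self.recent.append(now)
        return targets

    def restart_request(self, dyno: str) -> urllib.request.Request:
        """Build, but do not send, a Platform API dyno-restart request."""
        return urllib.request.Request(
            f"{HEROKU_API}/apps/{self.app}/dynos/{dyno}",
            method="DELETE",
            headers={
                "Accept": "application/vnd.heroku+json; version=3",
                "Authorization": f"Bearer {self.token}",
            },
        )
```

In use, you would feed `flagged` from whatever per-dyno outlier check you trust, run `observe` every minute or so, and pass each returned dyno's request to `urllib.request.urlopen`. The app name and token here are placeholders.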

A couple of notes, though. The Dyno Sniper feature is still very much an early beta feature on Judoscale. We've been running it successfully for a while ourselves but if you're interested in enabling it for your application, please let us know. In particular we'd be interested in users that are experiencing noisy neighbor issues under a fairly constant load and have APM systems capable of revealing per-dyno metrics. Applications running at least 5 dynos (of any type) make for the best candidates for sniping.

Check out The Dyno Sniper in real time!

(Auto) Scale

Alright, so you've lowered your Dyno Load to reasonable numbers, you've got sniping enabled, and you're feeling good about getting the best possible performance and experience you can out of Heroku. What's left? Autoscaling.

Picture it this way: you've got several dynos running throughout the day. For reasons we can't guess, some randomly become less performant thanks to noisy neighbors. At the same time, The Dyno Sniper is sniping certain low-performance dynos, causing them to be unavailable for a short time while they restart on new hardware. Added to all that, you've audited and brought down your Dyno Load to make sure that noisy neighbors have as little impact as possible. That's a lot of moving parts! Between the lower Dyno Load and the potential for more than one dyno to be temporarily halted, your app's maximum traffic capacity may be in constant flux — not to mention that the traffic itself is likely in constant flux! We need to be autoscaling.
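A little arithmetic shows why that flux matters. This sketch, using made-up throughput numbers, counts the dynos needed to cover peak traffic while keeping spares for any dynos that are mid-restart. The function name and parameters are hypothetical.

```python
import math


def dynos_needed(peak_rps: float, rps_per_dyno: float,
                 restarting: int = 1, headroom: float = 0.0) -> int:
    """Dynos needed to serve `peak_rps`, plus spares for dynos that are restarting.

    `rps_per_dyno` is what one dyno sustains at a healthy load; `headroom` adds
    a fractional safety margin on top of peak traffic (0.1 means 10%).
    """
    serving = math.ceil(peak_rps * (1.0 + headroom) / rps_per_dyno)
    return serving + restarting


# Hypothetical numbers: 180 req/s at peak, one dyno possibly mid-restart.
print(dynos_needed(180, 25))  # 9: ceil(7.2) = 8 serving + 1 spare
print(dynos_needed(180, 20))  # 10: fewer req/s per dyno after lowering its load
```

Every input here moves: tuning concurrency changes `rps_per_dyno`, traffic changes `peak_rps`, and snipes change `restarting`. That is why a fixed dyno count is so hard to get right.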

To put it shortly, The Dyno Sniper + Dyno Load efficiency will ensure that the quality of each dyno you have running is up to par, but autoscaling will ensure that the number of dynos you have running at any given time is appropriate for the volume of traffic you're receiving. Missing either of these pieces would result in an application with great dynos, but not enough of them — or plenty of dynos, but none of them particularly performant.

The good news is that installing and running Judoscale is easier than ever, and The Dyno Sniper comes at no extra cost. Judoscale will dynamically scale your app according to your request (or background job) queue time all day long.
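At its simplest, queue-time-based scaling is a threshold rule evaluated on a schedule. This sketch is not Judoscale's algorithm; the thresholds, step size, and min/max bounds are hypothetical defaults you would tune per app. Production autoscalers typically also look at a percentile over a time window and wait between scaling events so they don't flap.

```python
from dataclasses import dataclass


@dataclass
class ScaleRule:
    min_dynos: int = 2
    max_dynos: int = 10
    upscale_above_ms: float = 100.0   # hypothetical thresholds; tune per app
    downscale_below_ms: float = 20.0
    step: int = 1


def next_dyno_count(current: int, queue_time_ms: float, rule: ScaleRule) -> int:
    """One step of a simple threshold autoscaler driven by request queue time."""
    if queue_time_ms > rule.upscale_above_ms:
        target = current + rule.step
    elif queue_time_ms < rule.downscale_below_ms:
        target = current - rule.step
    else:
        target = current
    # Never leave the configured range, no matter what the signal says.
    return max(rule.min_dynos, min(rule.max_dynos, target))


rule = ScaleRule()
print(next_dyno_count(4, 250.0, rule))  # 5
```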

It's the kind of set-it-and-forget-it tool that just works. And that's the same ethos we built into The Dyno Sniper.

Wrap it Up

Okay, quick recap. First, noisy neighbors will always be a thing on shared hardware; it's technically unavoidable. But in practice, we can effectively solve the problem. Second, Heroku made changes this year that enforce their dyno resource limits more aggressively, and we need to account for that in how we manage our application's resource footprint! Third, it can be tricky to even identify whether you're having a noisy neighbors issue, so it's definitely worth spending some time with an APM that can break things down per dyno for you.

With those points out of the way, the recipe for (essentially) fixing your noisy neighbors problem is this:

- Optimize your app's Dyno Load footprint. Aim for 0.6 - 0.9 in your average load. Don't breach 2.0 in your max load if you can help it.

- Mitigate noisy neighbor events when they occur with The Dyno Sniper. This will make sure that the dynos you're paying for are giving you the resources you need.

- Autoscale your dynos based on queue time. This keeps your capacity matched to your traffic, even amidst dyno churn.

These steps take a bit of time, but they will rid you of your noisy neighbor concerns!

Need help? Have questions? Want to talk more about noisy neighbors? Judoscale is a tiny team of two and we read every email that comes our way — no need to be a customer of ours. Give us a shout and we’ll do our best to help you!